acp-mcp

The ACP to MCP Adapter is a lightweight standalone server that acts as a bridge between two AI ecosystems: Agent Communication Protocol (ACP) for agent-to-agent communication and Model Context Protocol (MCP) for connecting AI models to external tools. It allows MCP applications (like Claude Desktop) to discover ACP agents as MCP resources and run them through an MCP tool.

Capabilities & Tradeoffs

This adapter enables interoperability between ACP and MCP with specific benefits and tradeoffs:

Benefits

- Makes ACP agents discoverable as MCP resources

- Exposes ACP agent runs as MCP tools

- Bridges two ecosystems with minimal configuration

Current Limitations

- ACP agents become MCP tools instead of conversational peers

- No streaming of incremental updates

- No shared memory across servers

- Basic content translation between formats without support for complex data structures

This adapter is best suited to situations where you need ACP agents in MCP environments and can accept these compromises.
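To make the "basic content translation" limitation concrete, here is a minimal sketch. It assumes an ACP message is a list of parts, each carrying a content_type and a content string, and maps text parts to MCP-style {"type": "text", "text": ...} items; every other part collapses to a placeholder. The Part class and translate_parts function are hypothetical and do not reflect the adapter's actual code.

```python
from dataclasses import dataclass


@dataclass
class Part:
    """Hypothetical stand-in for an ACP message part (assumed shape)."""
    content_type: str
    content: str


def translate_parts(parts: list[Part]) -> list[dict]:
    """Map ACP-style parts to MCP-style text content items.

    Only text/* parts pass through verbatim; any other content type is
    flattened to a short placeholder instead of being translated structurally.
    """
    items = []
    for part in parts:
        if part.content_type.startswith("text/"):
            items.append({"type": "text", "text": part.content})
        else:
            # Complex or binary payloads are not converted, only labeled.
            items.append({"type": "text", "text": f"[unsupported content: {part.content_type}]"})
    return items


if __name__ == "__main__":
    parts = [Part("text/plain", "Good morning!"), Part("image/png", "...")]
    for item in translate_parts(parts):
        print(item)
```

A richer bridge would map structured parts to dedicated MCP content types; the sketch deliberately stops at text to mirror the limitation.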

Requirements

- Python 3.11 or higher

- Installed Python packages: acp-sdk and mcp

- A running ACP server (Tip: Follow the ACP quickstart to start one easily)

- An MCP client application (we use Claude Desktop in the quickstart)
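A short script can confirm the Python-side requirements before you start. This is a minimal sketch that assumes the two packages are importable as acp_sdk and mcp (the module names are an assumption, not stated in this README); it only checks that they are present and does not verify versions or whether an ACP server is running.

```python
import importlib.util
import sys

# Assumed import names for the acp-sdk and mcp distributions.
REQUIRED_MODULES = ("acp_sdk", "mcp")


def check_requirements() -> dict[str, bool]:
    """Report whether the Python version and required packages are present."""
    report = {"python>=3.11": sys.version_info >= (3, 11)}
    for name in REQUIRED_MODULES:
        # find_spec returns None when a top-level module cannot be located.
        report[name] = importlib.util.find_spec(name) is not None
    return report


if __name__ == "__main__":
    for requirement, ok in check_requirements().items():
        print(f"{requirement}: {'ok' if ok else 'missing'}")
```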

Quickstart

1. Run the Adapter

Start the adapter and connect it to your ACP server:

```bash
uvx acp-mcp http://localhost:8000
```

[!NOTE] Replace http://localhost:8000 with your ACP server URL if it differs.

Prefer Docker?

```bash
docker run -i --rm ghcr.io/i-am-bee/acp-mcp http://host.docker.internal:8000
```

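Tip: host.docker.internal lets Docker containers reach services running on the host. With Docker Engine on Linux this name is not defined by default; add --add-host=host.docker.internal:host-gateway to the docker run command, or adjust the URL for your setup.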

2. Connect via Claude Desktop

To connect via Claude Desktop, follow these steps:

- Open the Claude menu on your computer and navigate to Settings (note: this is separate from the in-app Claude account settings).

- Navigate to Developer > Edit Config

- The config file will be created here:

  - macOS: ~/Library/Application Support/Claude/claude_desktop_config.json

  - Windows: %APPDATA%\Claude\claude_desktop_config.json

- Edit the file with the following:

```json
{
  "mcpServers": {
    "acp-local": {
      "command": "uvx",
      "args": ["acp-mcp", "http://localhost:8000"]
    }
  }
}
```

Prefer Docker?

```json
{
  "mcpServers": {
    "acp-docker": {
      "command": "docker",
      "args": [
        "run",
        "-i",
        "--rm",
        "ghcr.io/i-am-bee/acp-mcp",
        "http://host.docker.internal:8000"
      ]
    }
  }
}
```
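If you prefer to script this step, the sketch below builds the same mcpServers entries shown in the two configs and merges them into an existing config without touching other servers. It is a hypothetical helper, not part of acp-mcp: the function names are illustrative, only the macOS and Windows paths from the steps are handled, and it prints the result rather than overwriting your file.

```python
import json
import os
import platform
from pathlib import Path


def claude_config_path() -> Path:
    """Return the Claude Desktop config location for Windows, else macOS."""
    if platform.system() == "Windows":
        return Path(os.environ["APPDATA"]) / "Claude" / "claude_desktop_config.json"
    # Only macOS and Windows locations are documented; other systems fall through here.
    return Path.home() / "Library" / "Application Support" / "Claude" / "claude_desktop_config.json"


def add_acp_server(config: dict, acp_url: str, use_docker: bool = False) -> dict:
    """Return a copy of config with an acp-mcp entry added under mcpServers."""
    servers = dict(config.get("mcpServers", {}))  # copy so the input is not mutated
    if use_docker:
        servers["acp-docker"] = {
            "command": "docker",
            "args": ["run", "-i", "--rm", "ghcr.io/i-am-bee/acp-mcp", acp_url],
        }
    else:
        servers["acp-local"] = {"command": "uvx", "args": ["acp-mcp", acp_url]}
    return {**config, "mcpServers": servers}


if __name__ == "__main__":
    path = claude_config_path()
    existing = json.loads(path.read_text()) if path.exists() else {}
    updated = add_acp_server(existing, "http://localhost:8000")
    # Review the output before writing it back to the config file yourself.
    print(json.dumps(updated, indent=2))
```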

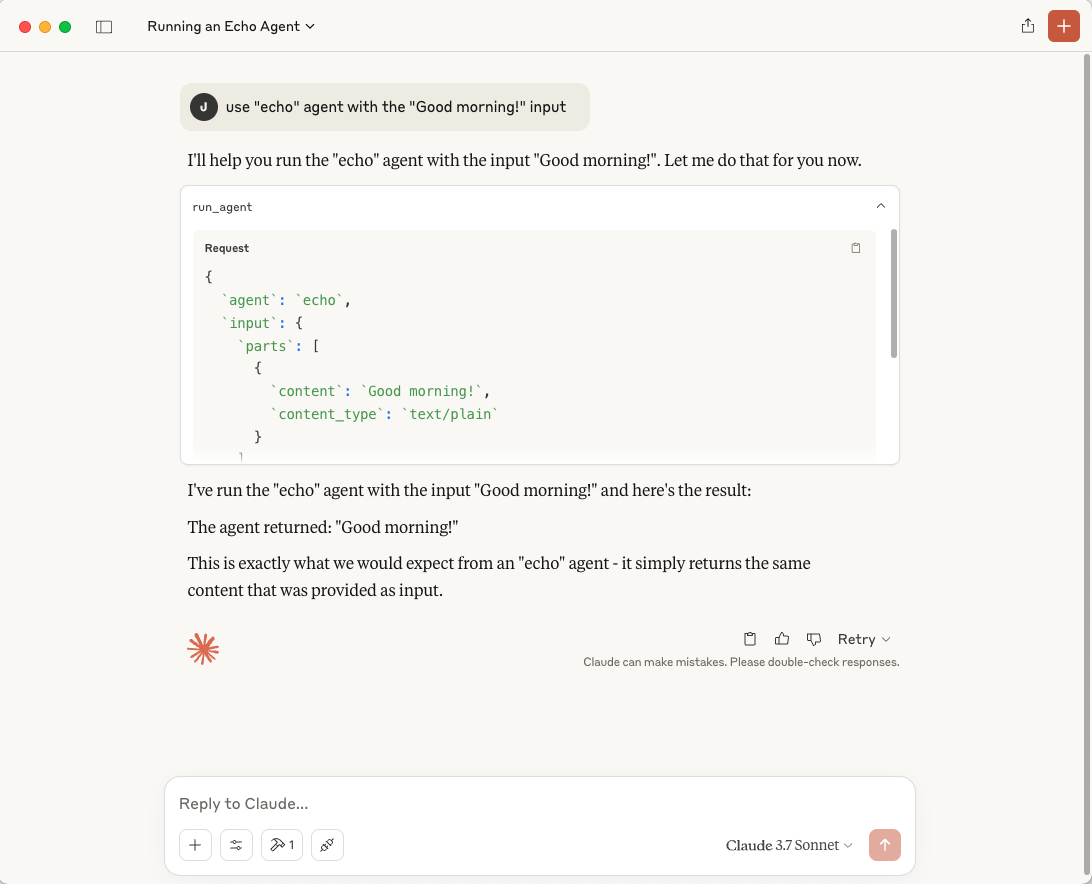

3. Restart Claude Desktop and Invoke Your ACP Agent

After restarting, invoke your ACP agent with:

use "echo" agent with the "Good morning!" input

Accept the integration and observe the agent running.

[!TIP] ACP agents are also registered as MCP resources in Claude Desktop.

To attach one manually, click the Resources icon (two plugs connecting) in the sidebar, labeled "Attach from MCP", then select an agent such as acp://agents/echo.

How It Works

- The adapter connects to your ACP server.

- It automatically discovers all registered ACP agents.

- Each ACP agent is registered in MCP as a resource using the URI acp://agents/{agent_name}.

- The adapter provides a new MCP tool called run_agent, which lets MCP apps invoke ACP agents.
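The discovery-and-registration flow can be modeled in plain Python. In this sketch, agent discovery is replaced by a hardcoded dictionary of hypothetical agents (callables taking a single string input, an assumption), and run_agent is a hypothetical function that accepts either a bare agent name or an acp://agents/{agent_name} URI. It uses neither the acp-sdk nor the mcp library and is not the adapter's implementation.

```python
from collections.abc import Callable
from urllib.parse import urlparse

# Hypothetical stand-in for the agents the adapter would discover on an ACP server.
AGENTS: dict[str, Callable[[str], str]] = {
    "echo": lambda text: text,
}


def resource_uri(agent_name: str) -> str:
    """Build the MCP resource URI for an ACP agent."""
    return f"acp://agents/{agent_name}"


def agent_name_from_uri(uri: str) -> str:
    """Extract the agent name from an acp://agents/{agent_name} URI."""
    parsed = urlparse(uri)
    if parsed.scheme != "acp" or parsed.netloc != "agents":
        raise ValueError(f"not an ACP agent URI: {uri}")
    return parsed.path.lstrip("/")


def list_resources() -> list[str]:
    """Return one resource URI per discovered agent."""
    return [resource_uri(name) for name in AGENTS]


def run_agent(agent: str, input_text: str) -> str:
    """Hypothetical run_agent tool: resolve the agent, then call it."""
    name = agent_name_from_uri(agent) if agent.startswith("acp://") else agent
    if name not in AGENTS:
        raise KeyError(f"unknown agent: {name}")
    return AGENTS[name](input_text)


if __name__ == "__main__":
    print(list_resources())
    print(run_agent("echo", "Good morning!"))
```

Keeping a single generic tool, rather than one tool per agent, means newly discovered agents only add resources; the tool list stays fixed.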

Supported Transports

- Currently supports the stdio transport
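With the stdio transport, the MCP client (for example Claude Desktop) launches the adapter as a subprocess and exchanges JSON-RPC 2.0 messages over its stdin and stdout, one message per line; this is also why the Docker commands pass -i, which keeps stdin open. The sketch below shows only that newline-delimited framing, using in-memory streams. It is not the adapter's code, and request handling is omitted.

```python
import io
import json
from collections.abc import Iterator
from typing import TextIO


def write_message(stream: TextIO, message: dict) -> None:
    """Write one JSON-RPC message as a single newline-terminated line."""
    # json.dumps escapes newlines inside strings, so each message stays on one line.
    stream.write(json.dumps(message, separators=(",", ":")) + "\n")
    stream.flush()


def read_messages(stream: TextIO) -> Iterator[dict]:
    """Yield JSON-RPC messages from a newline-delimited stream."""
    for line in stream:
        line = line.strip()
        if line:  # skip blank lines
            yield json.loads(line)


if __name__ == "__main__":
    buf = io.StringIO()
    write_message(buf, {"jsonrpc": "2.0", "id": 1, "method": "tools/list"})
    write_message(buf, {"jsonrpc": "2.0", "id": 2, "method": "ping", "params": {"note": "a\nb"}})
    buf.seek(0)
    for msg in read_messages(buf):
        print(msg["id"], msg["method"])
```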

Developed by contributors to the BeeAI project, this initiative is part of the Linux Foundation AI & Data program. Its development follows open, collaborative, and community-driven practices.
